Generative AI has already demonstrated significant potential in robotics. Its applications span various domains, including natural language interactions, robot learning, no-code programming, and design. This week, Google’s DeepMind Robotics team is highlighting another promising intersection between AI and robotics: navigation.

In its latest paper, “Mobility VLA: Multimodal Instruction Navigation with Long-Context VLMs and Topological Graphs,” the team describes how it used Google Gemini 1.5 Pro to teach a robot to follow commands and navigate an office environment. DeepMind used some of the Everyday Robots machines, which have remained on hand since Google shut down the project amid widespread layoffs last year.

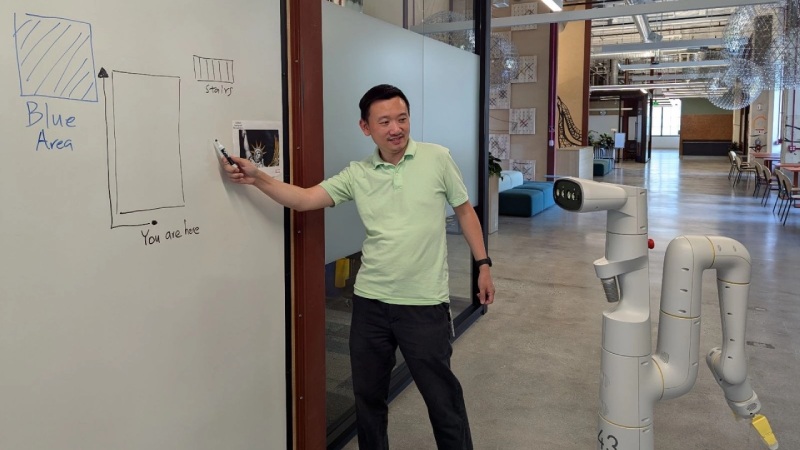

The project includes several videos in which DeepMind employees begin by saying “OK, Robot,” much like waking a smart assistant, and then instruct the system to perform various tasks in the 9,000-square-foot office. In one instance, a Googler asks the robot to take him to a place where he can draw. The robot, sporting a jaunty yellow bowtie, replies, “OK, give me a minute. Considering Gemini…” and then leads the person to a huge whiteboard. In another video, a different person instructs the robot to follow the directions on the whiteboard.

A simple map drawn on the whiteboard directs the robot to the “Blue Area.” After pausing for a moment, the robot takes a long route, eventually arriving at a robotics testing area. The robot then confidently announces, “I’ve successfully followed the directions on the whiteboard,” exhibiting a level of self-assurance most humans could only wish for.

Before these demonstrations, the robots were familiarized with the office space through a method called “Multimodal Instruction Navigation with demonstration Tours (MINT).” In this process, a person walks the robot around the office while pointing out landmarks using speech. The team then uses hierarchical Vision-Language-Action (VLA) to combine that understanding of the environment with common-sense reasoning. The result is a robot that can respond to written and drawn commands as well as gestures.
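For readers who want a feel for how a narrated tour can turn into navigation, here is a minimal Python sketch of the general idea suggested by the paper's title: frames from the tour become nodes in a topological graph, a high-level step picks a goal frame for an instruction, and a low-level step finds a route through the graph. This is not DeepMind's implementation. Every name in it (`TourFrame`, `build_topological_graph`, `pick_goal_frame`, `shortest_path`, `max_edge_m`) is hypothetical, the 2D positions are assumed, and simple keyword matching stands in for the call to a long-context vision-language model such as Gemini 1.5 Pro.

```python
import heapq
import math
from dataclasses import dataclass
from typing import Tuple


@dataclass
class TourFrame:
    """One moment of the demonstration tour (all fields are illustrative)."""
    index: int
    position: Tuple[float, float]  # assumed 2D position estimate, in meters
    narration: str = ""            # spoken landmark description, if any


def build_topological_graph(frames, max_edge_m=2.0):
    """Connect consecutive tour frames, plus any two frames that lie close together."""
    graph = {f.index: {} for f in frames}
    for a in frames:
        for b in frames:
            if a.index >= b.index:
                continue
            dist = math.dist(a.position, b.position)
            if b.index == a.index + 1 or dist <= max_edge_m:
                graph[a.index][b.index] = dist
                graph[b.index][a.index] = dist
    return graph


def pick_goal_frame(frames, instruction):
    """Stand-in for the high-level step.

    A real system would ask a vision-language model; this placeholder only
    counts words shared by the instruction and each frame's narration.
    """
    words = set(instruction.lower().split())

    def overlap(frame):
        return len(words & set(frame.narration.lower().split()))

    best = max(frames, key=overlap)
    return best.index if overlap(best) > 0 else None


def shortest_path(graph, start, goal):
    """Low-level step: Dijkstra's algorithm over the topological graph."""
    queue = [(0.0, start, [start])]
    visited = set()
    while queue:
        cost, node, path = heapq.heappop(queue)
        if node == goal:
            return path
        if node in visited:
            continue
        visited.add(node)
        for neighbor, weight in graph[node].items():
            if neighbor not in visited:
                heapq.heappush(queue, (cost + weight, neighbor, path + [neighbor]))
    return None  # goal is unreachable from start


# A made-up four-stop tour that loops back near its starting point.
tour = [
    TourFrame(0, (0.0, 0.0), "entrance"),
    TourFrame(1, (4.0, 0.0), "kitchen"),
    TourFrame(2, (4.0, 3.0)),
    TourFrame(3, (0.0, 3.0), "big whiteboard where people draw"),
]
graph = build_topological_graph(tour, max_edge_m=3.0)
goal = pick_goal_frame(tour, "take me somewhere I can draw")
print(shortest_path(graph, 0, goal))  # prints [0, 3]
```

Because the last stop of this toy tour sits three meters from the entrance, the graph gains a shortcut edge, and the route goes straight to the whiteboard instead of retracing the whole tour. A graph that kept only consecutive frames would return `[0, 1, 2, 3]`.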

Taken together, the demonstrations show how generative AI can let a robot navigate a real office from spoken, written, drawn, and gestured instructions. That combination points toward more capable and intuitive robotic assistants in a range of settings.